Here’s a script that considers complete SQL commands and splits the file into chunks of less than 30 MB while keeping entire SQL commands together.

Key Points:

- SQL Command Buffer: The script uses a buffer (

sql_buffer) to accumulate lines of SQL commands. It only writes to the file when it detects the end of a command (a line ending with a semicolon;). - File Size Check: Before writing the command to the file, it checks if adding the command would exceed the 30 MB limit. If so, it closes the current file and starts a new one.

- End of SQL Command: The script assumes that an SQL command ends with a semicolon (

;). This is common, but if your SQL commands have different terminators or delimiters, you might need to adjust the logic accordingly. - Complete Commands: By buffering lines until a full command is detected, the script ensures that commands are not split across files.

- Creating Output Directory: The script checks if the output directory exists and creates it if necessary.

import os

# Path to the large SQL file

input_file = r"sql file path"

# Path to the directory where split files will be saved (make sure this path exists)

output_dir = r"output directory path "

# Create the output directory if it doesn't exist

if not os.path.exists(output_dir):

os.makedirs(output_dir)

# Define the size limit for each split file (30 MB in bytes)

MAX_FILE_SIZE = 30 * 1024 * 1024 # 30 MB

def split_sql_file(input_file, output_dir, max_size):

# Open the large SQL file

with open(input_file, 'r', encoding='utf-8', errors='ignore') as sql_file:

current_file_size = 0

part_number = 1

output_file_name = os.path.join(output_dir, f"split_part_{part_number}.sql")

output_file = open(output_file_name, 'w', encoding='utf-8')

# Initialize a buffer to store SQL commands

sql_buffer = []

# Read the large file line by line

for line in sql_file:

# Add the line to the buffer

sql_buffer.append(line)

# Check if the line ends with a semicolon (assume it's the end of an SQL command)

if line.strip().endswith(';'):

# Join the buffer into a single SQL command

sql_command = ''.join(sql_buffer)

line_size = len(sql_command.encode('utf-8'))

# If adding this command exceeds the file size limit, close the current file and open a new one

if current_file_size + line_size > max_size:

output_file.close() # Close the current file

part_number += 1 # Increment the part number

output_file_name = os.path.join(output_dir, f"split_part_{part_number}.sql") # New file name with directory

output_file = open(output_file_name, 'w', encoding='utf-8') # Open new file

current_file_size = 0 # Reset the current file size

# Write the SQL command to the current file

output_file.write(sql_command)

current_file_size += line_size # Update the current file size

# Clear the buffer for the next command

sql_buffer = []

# Close the last output file

output_file.close()

print(f"Splitting complete. Created {part_number} files in {output_dir}")

# Call the function to split the SQL file

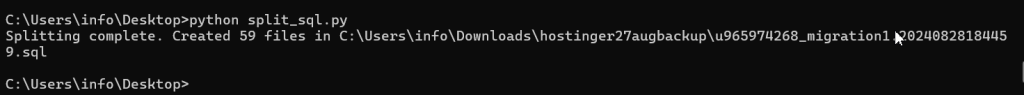

split_sql_file(input_file, output_dir, MAX_FILE_SIZE)You can run the code in command prompt and output will be like this

o ensure that SQL queries remain complete within each file, you need to handle the SQL statements carefully, so they don’t get cut off in the middle of a statement. A better approach is to split the file based on SQL commands and ensure that each file contains a complete set of SQL statements.